Agentic AI in regulated Enterprises is not easy otherwise everyone would be at it..

Agentic AI is a full software development project, and the dream of "easy automation" is why most 95% of poorly formed POCs fail to scale

The promise is glossy: a 20 line prompt, a quick MCP connection to your enterprise data, and suddenly, half your business is automated by multi-agent AI and your costs to serve drop 30-50%.

This is the narrative driving massive investment in the trillions , yet we in the trenches Disseqt knows the truth from live deployments. Agentic AI is a full software development project, and the dream of "easy automation" is why most 95% of poorly formed POCs fail to scale.

The Problem: Why Less Than 5% of Agents Make It to Production

The shiny demos intentionally skip over the operational reality. We’re dealing with technical, governance, and compliance risks that traditional SaaS software I sold and supported for 25 years doesn't present.

The hard data confirms this: The Nanda MIT Report found that less than 5% of Agentic POC’s make it to scale production in 2025.

Why? Because the moment you introduce Autonomy vs. Control and Context Drift & Memory Leakage into a system that handles customer data or critical IT processes, you hit a wall of complexity. You can't safely deploy an agent if you can't trust its decision-making and validate it is actually correct and tested by a third party to the LLM.

The three harsh truths that derail enterprise Agentic AI are:

It’s not deterministic.

It’s not secure by default & MCP is like giving it the api keys to all your databases and PII with full CRUD permissions.

It is not compliant by design without extreme rigor and oversight.

The Root Cause: Agents Behave Like Humans, Not Code

Traditional software is predictable. Same input, same output. Your agents, however, are fundamentally non-deterministic. They reason, plan, and act across systems, changing responses based on context and new information.

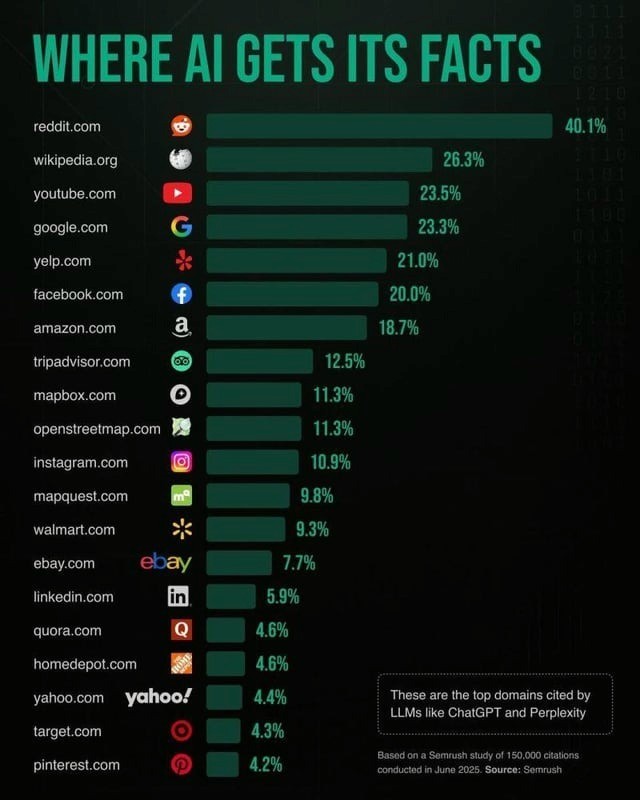

If you ask me the same question three times in three interviews over three weeks I will change the answer depending on what's top of mind that day. With Agentic AI it's what's in the training data that changes daily and guess where that training data came from ?

See below answer from Gemini 3 today 25/12/25

Skewed Demographics: Western countries dominate debates

"Hive Mind" Mentality: Subreddits often develop their own internal cultures and strong opinions, which can lead to echo chambers and a "hive mind" effect.

Amplification of Societal Biases: Studies have shown that data derived from conversational platforms like Reddit can contain and amplify existing human biases related to gender, race, religion, and other social dimensions.

Factual Accuracy: A significant portion of Reddit content is user-generated opinion, speculation, or anecdotal experience, not verified fact

In summary, Reddit data is valuable for LLM training because it provides unique insights into human conversation, but it is not unbiased. Its use requires extensive technical and ethical efforts to filter, curate, and mitigate the inherent biases, subjectivity, and inaccuracies.

That flexibility in answers from the knowledge of the world of humans on the internet is a game changer for answering any question on any topic , but it introduces massive business risk:

Confused Deputy: Does the agent misuse its access permissions?

Auditability & Traceability: Can you explain why the agent took a specific action to a regulator or internal auditor?

Lack of Testing Frameworks: How do you test a system that behaves differently every time?

This is the gap where regulated enterprises and their System Integrator partners get stuck,the weakly architected POC won't scale .

The lie isn’t that Agentic AI exists; the lie is that it’s easy.

The Solution: The Enterprise-Grade Agentic Platform

We founded Disseqt.AI to solve this operational nightmare. We provide the Lean Agentic Service as a Software Workbench that Devops/IT Teams need to safely deploy AI on any platform, ensuring safety, transparency, security, and accountability for Agentic AI applications.

We don’t just build agents; we dissect the risks and provide the patented framework necessary to make them reliable at scale.

Our proprietary technology is purpose-built to engineer trust into non-deterministic systems and augment humans in the loop and in control for EU AI Act and regulator compliance by design :

Disseqt Simulation Driven Risk Testing: We use proprietary technology to replay full process interactions with timestamp, state change, and branching logic. This robust monitoring and red teaming for live agents allows us to find the flaws before they hit production.

Disseqt Malicious Context Engine: We provide multi-turn deep pre-production testing and QA, including Malicious Prompt test sets and Jailbreaking, to secure your agent against hostile inputs.

Disseqt Input and Output Validation QA Workbench: With over 65 validators on user input, we ensure quality, factuality, safety, and style validation of model responses before model execution.

Disseqt Explainability Logging Dashboard: For GRC and EU AI Act compliance, we fully trace agents’ decision chains, providing the necessary audit trail for regulatory compliance.

This engineering rigor delivers tangible value, not just promises: our customers see a 70% reduction in Agentic testing hours and an 80% time saved in deployment.

Moving Beyond the Hype

The path to scaled Agentic AI is paved with rigorous testing and contextual engineering , not prompt hacking. You need to simulate production scenarios, like we do when integrating with MSFT Co-pilot , or securely automating critical workflows, such as a bank’s chargeback process.

If you are a Global System Integrator or an Enterprise IT leader tired of seeing AI deals stall due to compliance concerns, or if you are stuck in the <5% zone of POC failure, the answer isn't a better prompt.

The answer is engineering discipline and augmenting your DevOps or IT teams ability to scale testing,jailbreaking and Redteaming multiple LLMs until you find the best agentic solution for your POCs , enabled by the right platform.

If you’re ready to build an Agentic AI that actually scales across your enterprise , secures your data, and delivers measurable ROI the hard, real way let’s talk.

© DISSEQT AI LIMITED